Microsoft’s Agent Lightning brings reinforcement learning to existing AI agents, letting them learn from experience without being rebuilt. Here is how the framework works, how Microsoft tested it, and what its open-source release means for developers, along with other recent AI news.

Microsoft Agent Lightning: A New Era in AI Learning

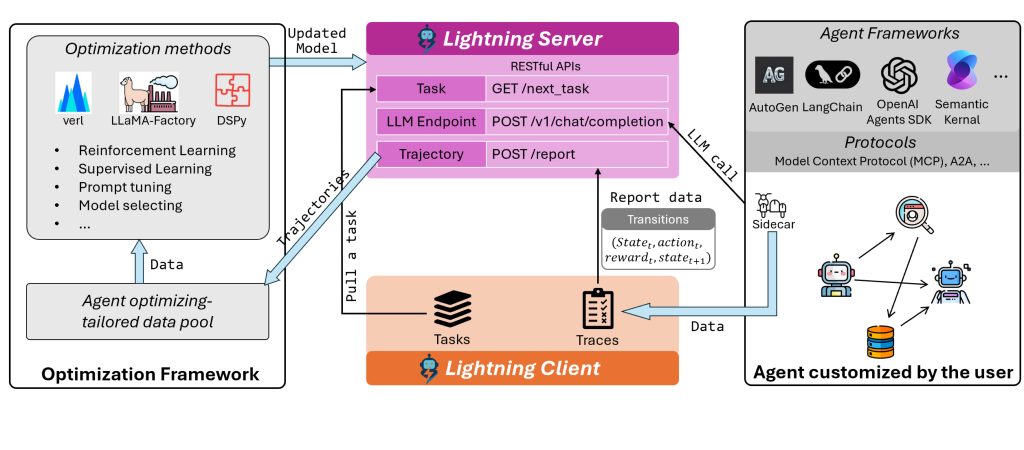

Microsoft has introduced Agent Lightning, a reinforcement learning framework that lets AI agents learn from experience with almost no changes to their existing code. Previously, improving an agent this way typically meant rebuilding it around a training setup. Agent Lightning instead monitors the agent’s behavior, including its responses, tool usage, and the feedback it receives, to identify where it can improve.

This effectively gives the AI a memory of its successes and failures to train on. The framework works alongside existing tools and applications rather than replacing them: a Lightning Server handles training on the backend, while a Lightning Client collects performance data from real-world use.


Agent Lightning Framework Details and Testing

The framework records every agent interaction, such as answering a query or calling a tool, and sends it to the server, which uses it to refine the agent’s model. Training happens in the background, so users can keep interacting with their AI through browsers, chatbots, or existing workflows as usual.
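To make the client/server split concrete, here is a minimal Python sketch of the pattern: a client wraps an existing agent, records each step as a (prompt, response, reward) transition, and ships finished episodes to a training server that batches them. All class and method names here (`TracingClient`, `TrainingServer`, `ready_batch`) are hypothetical illustrations, not the API of Microsoft’s open-source package, and the actual reinforcement learning update is left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Transition:
    """One agent step: what the model saw, what it produced, and the reward it earned."""
    prompt: str
    response: str
    reward: float


@dataclass
class TrainingServer:
    """Hypothetical training backend that buffers traces until a batch is ready."""
    buffer: List[Transition] = field(default_factory=list)

    def submit(self, transitions: List[Transition]) -> None:
        self.buffer.extend(transitions)

    def ready_batch(self, batch_size: int) -> List[Transition]:
        # Hand back a full batch once enough traces have accumulated; otherwise nothing.
        if len(self.buffer) < batch_size:
            return []
        batch, self.buffer = self.buffer[:batch_size], self.buffer[batch_size:]
        return batch


class TracingClient:
    """Hypothetical client that sits beside an existing agent and records each step."""

    def __init__(self, server: TrainingServer) -> None:
        self.server = server
        self.trace: List[Transition] = []

    def record(self, prompt: str, response: str, reward: float = 0.0) -> None:
        self.trace.append(Transition(prompt, response, reward))

    def finish_episode(self) -> None:
        # Ship the whole episode to the server, then start a fresh trace.
        self.server.submit(self.trace)
        self.trace = []


if __name__ == "__main__":
    server = TrainingServer()
    client = TracingClient(server)
    client.record("How many users signed up in May?",
                  "SELECT COUNT(*) FROM users WHERE signup_month = 5", reward=1.0)
    client.finish_episode()
    print(len(server.ready_batch(batch_size=1)))
```

The agent itself never changes here: it keeps serving users, and only the recorded traces flow to the training side, which mirrors the separation described above.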

Microsoft tested Agent Lightning on three distinct applications:

- A text-to-SQL agent that converts natural-language questions into SQL queries, evaluated on the Spider dataset (over 10,000 questions).

- A retrieval-augmented generation (RAG) agent that searches and summarizes information from a corpus of 21 million Wikipedia-based documents.

- A math agent that calls a calculator tool to work through problems step by step.

In all three tests, the agent’s performance improved steadily as training continued.

Advanced Features and Open-Source Availability

Agent Lightning also includes “automatic intermediate rewarding,” which gives the agent feedback at intermediate steps during training, akin to offering hints along the way rather than only a final pass or fail grade. This denser feedback makes training smoother and faster.
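The difference between a single pass/fail grade and intermediate feedback shows up in the per-step returns a reinforcement learning algorithm learns from. The sketch below is an illustrative reward-shaping scheme, not Agent Lightning’s actual rewarding mechanism: it assumes a hypothetical bonus of 0.1 for each successful tool call, an outcome reward of 1.0 for a correct final answer, and a discount factor of 0.9.

```python
from typing import List


def step_rewards(tool_call_ok: List[bool], final_correct: bool,
                 step_bonus: float = 0.1, final_reward: float = 1.0) -> List[float]:
    """Give each successful step a small bonus and add the outcome reward to the last step.

    The bonus and outcome values are illustrative assumptions.
    """
    rewards = [step_bonus if ok else 0.0 for ok in tool_call_ok]
    if rewards:
        rewards[-1] += final_reward if final_correct else 0.0
    return rewards


def discounted_returns(rewards: List[float], gamma: float = 0.9) -> List[float]:
    """Compute G_t = r_t + gamma * G_{t+1}, walking backwards over the episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    # Three steps: the second tool call failed, but the final answer was correct.
    shaped = step_rewards([True, False, True], final_correct=True)
    print(shaped, discounted_returns(shaped))
    # A failed episode still carries signal for the steps that went right.
    print(step_rewards([True, True, True], final_correct=False))
```

With outcome-only rewarding, the failed episode in the last line would give every step a reward of zero, leaving the agent nothing to learn from; the shaped version still credits the three successful tool calls.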

The framework is also open source, so developers can integrate it into their own projects to improve their agents. In short, Microsoft has built a faster, more efficient way for AI agents to get better at their tasks with less manual intervention.

OpenAI’s Safety-Focused Models

In contrast to Microsoft’s focus on reinforcement learning, OpenAI has released two new models, gpt-oss-safeguard-120b and gpt-oss-safeguard-20b, designed to detect harmful and fraudulent online content. They are open-weight models: their trained parameters are published, so developers can download, run, inspect, and adapt them, although the training data and training code are not released.


This approach gives researchers transparency into how the models reach their decisions. Rather than simply labeling content as unsafe, the models reason over a safety policy supplied by the developer and explain why content was flagged.
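OpenAI describes these safeguard models as classifying content against a policy that the developer writes and supplies at inference time, rather than against a fixed built-in taxonomy. A minimal sketch of preparing such a request and reading back a verdict follows. The Hugging Face model id, the example policy, and the JSON verdict format are assumptions for illustration; the output format is whatever your policy asks for, not something the model guarantees.

```python
import json
from typing import Dict, List

# Assumption: Hugging Face id of the smaller model; verify on the hub before use.
MODEL_ID = "openai/gpt-oss-safeguard-20b"

# Hypothetical example policy written by a platform, not by OpenAI.
EXAMPLE_POLICY = """You classify user posts for a marketplace.
VIOLATING: offers of fake reviews, purchased followers, or account selling.
NON-VIOLATING: everything else.
Answer with JSON: {"label": "violating" | "non-violating", "reason": "<one sentence>"}"""

ALLOWED_LABELS = ("violating", "non-violating")


def build_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Put the policy in the system turn and the item to classify in the user turn."""
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]


def parse_verdict(final_answer: str) -> Dict[str, str]:
    """Parse the JSON verdict that EXAMPLE_POLICY asks the model to return."""
    data = json.loads(final_answer)
    label = data.get("label")
    if label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected label: {label!r}")
    return {"label": label, "reason": data.get("reason", "")}


if __name__ == "__main__":
    msgs = build_messages(EXAMPLE_POLICY, "Selling 10k Instagram followers, DM me")
    print(msgs[1]["content"])
    # Stand-in for a model response, used only to exercise the parser.
    print(parse_verdict('{"label": "violating", "reason": "Offers purchased followers."}'))
```

The message list could be passed to any chat-capable runtime serving the model, such as a Hugging Face `transformers` text-generation pipeline; only the model’s final answer, not its reasoning, would then go to `parse_verdict`. Changing what counts as a violation means editing the policy text, not retraining the model.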

Testing and Availability of OpenAI Models

The gpt-oss-safeguard models were developed in collaboration with ROOST (Robust Open Online Safety Tools) and tested with partners including Discord and SafetyKit. Both models are available on Hugging Face as a research preview, and OpenAI is encouraging the wider safety community to stress-test them.

The release is a step toward more transparent and accountable AI moderation, something governments have called for but that few companies have delivered so far.

Telegram Founder’s Decentralized AI Network

Pavel Durov, the founder of Telegram, has announced Cocoon (Confidential Compute Open Network), a network built on the TON blockchain. The project aims to establish a decentralized AI network that prioritizes user data privacy.

It connects individuals who own Graphics Processing Units (GPUs) with developers requiring computing power. GPU owners contribute their hardware to process AI tasks and receive payment in Toncoin, while developers use the same token to access these services, creating a peer-to-peer marketplace for computation.
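The marketplace side of Cocoon comes down to matching compute requests to hardware listings. The sketch below shows one simple matching rule: pick the cheapest provider that meets the job’s memory requirement and budget. The field names, the TON-per-hour pricing, and the rule itself are hypothetical and not taken from Cocoon’s actual protocol.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Provider:
    """A GPU listing; the fields are hypothetical, not Cocoon's real schema."""
    name: str
    vram_gb: int
    price_ton_per_hour: float


@dataclass
class Job:
    """A developer's compute request, with a budget in TON."""
    model: str
    min_vram_gb: int
    hours: float
    max_budget_ton: float


def match(job: Job, providers: List[Provider]) -> Optional[Provider]:
    """Return the cheapest provider with enough VRAM whose total cost fits the budget."""
    candidates = [
        p for p in providers
        if p.vram_gb >= job.min_vram_gb
        and p.price_ton_per_hour * job.hours <= job.max_budget_ton
    ]
    return min(candidates, key=lambda p: p.price_ton_per_hour, default=None)


if __name__ == "__main__":
    listings = [
        Provider("alice", vram_gb=24, price_ton_per_hour=0.5),
        Provider("bob", vram_gb=80, price_ton_per_hour=2.0),
        Provider("carol", vram_gb=80, price_ton_per_hour=1.5),
    ]
    job = Job(model="llm-70b", min_vram_gb=40, hours=2.0, max_budget_ton=4.0)
    chosen = match(job, listings)
    print(chosen.name if chosen else "no match")
```

A real network would also need to handle payment settlement, verification that the work was done, and provider reputation. This sketch covers only the matching step.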

Cocoon’s Privacy Features and Telegram Integration

Cocoon’s key feature is privacy: tasks are processed in encrypted, confidential computing environments, so even the GPU providers cannot see the data they are processing.

Durov described Cocoon as an effort to counter what he sees as a decline in digital freedoms over the past two decades. Telegram is slated to be Cocoon’s first major client, integrating the network into its apps and bot ecosystem starting in November 2025.

With more than one billion users, Telegram could accelerate mainstream use of decentralized AI for tasks such as message summarization and draft writing, which today typically involve sending user data to centralized providers.

Cocoon Network Participation and Investment

Applications are currently open for both GPU providers, who can list their hardware specifications, and developers, who can specify their model requirements and compute needs. Following the announcement, AlphaTON Capital, a Nasdaq-listed company, committed significant investment in GPU infrastructure to support Cocoon, with plans to deploy high-performance GPUs globally.

The company’s leadership said Cocoon could close major gaps in the AI market around privacy and security at scale. Cocoon also aligns with Kazakhstan’s AI development initiatives, following a meeting between Durov and President Kassym-Jomart Tokayev about a potential Telegram AI lab.

Toncoin Market Reaction and Ecosystem Expansion

Toncoin’s price dipped slightly to around $2.20, for a market capitalization of $5.66 billion, while trading volume rose 3.4%, a sign of continued market interest.
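As a rough consistency check on these figures, dividing market capitalization by price gives the implied circulating supply. This assumes the quoted market cap is computed on circulating rather than total supply, which is the usual convention on price trackers:

$$\text{supply} \approx \frac{\text{market cap}}{\text{price}} = \frac{\$5.66 \times 10^{9}}{\$2.20\ \text{per TON}} \approx 2.57 \times 10^{9}\ \text{TON}$$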

The TON ecosystem has been expanding with features like payments, mini-apps, and NFTs, and Cocoon now adds AI computing capabilities, positioning it as a competitor to cloud computing services like AWS and Azure.

The success of Cocoon will depend on its ability to attract sufficient GPU and developer participation, with Durov’s reputation for privacy-focused technology lending credibility to the project.

Elon Musk Launches Grokipedia

Elon Musk has launched Grokipedia, an AI-powered encyclopedia built by his company xAI using its Grok model. The platform is meant to rival Wikipedia by using AI to create and maintain content, with the stated aim of minimizing human bias.

Musk’s goal is an unbiased knowledge platform that updates itself, addressing the editor bias and inconsistent accuracy that critics often attribute to Wikipedia. Grokipedia uses xAI’s models to process and verify information autonomously, which immediately drew comparisons to Wikipedia; the key difference is that Grokipedia relies on what Musk frames as algorithmic objectivity, while Wikipedia relies on community consensus.

Reception and Implications of Grokipedia

Grokipedia has drawn mixed reactions: supporters see it as the future of factual accuracy, while critics argue that AI cannot grasp nuance and context the way human editors can.

Whatever its reception, the launch shows how deeply AI is being integrated into information systems. Investors and tech analysts are watching Grokipedia closely, since it could redefine how online information is curated and verified, or at least demonstrate AI’s potential to take over human roles in knowledge ecosystems.

Adobe’s Experimental Creative Tools at MAX

At its Adobe MAX 2025 Sneaks session, Adobe showcased more than ten experimental tools built on generative AI. Project Motion Map animates static Illustrator designs from text prompts; in one demonstration, a burger illustration came to life as the system automatically detected and separated its layers and animated each one individually.

Project Clean Take lets users edit speech directly in a video’s transcript, changing the tone of delivery or replacing words, and the audio updates naturally to match. It also isolates background sounds so they can be adjusted or muted, and can replace background music with AI-generated, royalty-free tracks.

Further Adobe Innovations and YouTube’s AI Upscaling

Project Light Touch lets users change the lighting in a photo after it was taken by moving virtual light sources, which instantly reshapes the shadows. Project Frame Forward lets users edit a video by modifying a single frame, with AI automatically carrying the change across the entire video.

These tools highlight Adobe Research’s push into generative AI for creative workflows, blending visual and temporal reasoning for a more conversational editing experience.

Separately, YouTube has begun using AI to upscale videos uploaded below 1080p to HD, with 4K upscaling on TVs planned for later. Upscaled versions appear in the video quality menu as “Super Resolution,” and creators can opt out.

YouTube Updates and IBM’s Granite Models

YouTube has also raised the thumbnail size limit from 2 MB to 50 MB, enabling 4K thumbnails. Other TV-focused additions include immersive previews for browsing channels, show-style collections for binge-watching, contextual search that prioritizes results from the channel being viewed, and QR codes linking to product pages for items mentioned in videos.
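Some quick arithmetic shows why the old cap was a bottleneck for 4K thumbnails. A 3840 × 2160 image stored as raw 8-bit RGB takes about 24.9 MB, more than twelve times the old 2 MB limit but under the new 50 MB one. Real thumbnails are compressed (JPEG or PNG) and usually much smaller, so treat this as an upper-bound illustration. The sketch also assumes decimal megabytes, since YouTube’s exact byte accounting isn’t stated here.

```python
# Assumption: limits use decimal megabytes (1 MB = 1,000,000 bytes).
OLD_LIMIT_BYTES = 2 * 1_000_000
NEW_LIMIT_BYTES = 50 * 1_000_000


def raw_rgb_bytes(width: int, height: int, channels: int = 3) -> int:
    """Size of an uncompressed image with one byte per channel per pixel."""
    return width * height * channels


def fits(size_bytes: int, limit_bytes: int) -> bool:
    """True if a file of size_bytes is within the upload limit."""
    return size_bytes <= limit_bytes


if __name__ == "__main__":
    uhd = raw_rgb_bytes(3840, 2160)
    print(uhd)                          # 24883200
    print(fits(uhd, OLD_LIMIT_BYTES))   # False
    print(fits(uhd, NEW_LIMIT_BYTES))   # True
```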

These updates aim to make watching YouTube on TV more interactive.

IBM has released Granite 4.0 Nano, a family of eight small but capable AI models ranging from about 350 million to 1 billion parameters, designed to run directly on users’ devices instead of relying on data centers.

Granite Models’ Performance and Open-Source Nature

The Nano models were trained on the same 15-trillion-token dataset as IBM’s larger Granite 4.0 models. The hybrid variants combine Mamba-2 state-space layers with transformer attention, which IBM says reduces memory use without sacrificing performance, so the models can run on laptops and phones or even inside a web browser. They are supported by local inference tools such as vLLM, llama.cpp, and MLX.
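A back-of-the-envelope estimate shows why models this size fit on consumer hardware. The sketch below computes the memory needed just to hold the weights. It uses the nominal 350 million and 1 billion parameter sizes quoted above (the released checkpoints’ exact counts may differ) and two common storage formats: 16-bit (2 bytes per parameter) and 4-bit quantized (0.5 bytes per parameter). It ignores activations and other runtime state, so real usage will be somewhat higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to store the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


SIZES = {"350M": 350e6, "1B": 1e9}        # nominal sizes from the text
FORMATS = {"16-bit": 2.0, "4-bit": 0.5}   # bytes per parameter

if __name__ == "__main__":
    for size_name, params in SIZES.items():
        for fmt_name, bpp in FORMATS.items():
            print(f"{size_name} @ {fmt_name}: {weight_memory_gb(params, bpp):.2f} GB")
```

Even the largest Nano model needs only about 2 GB for 16-bit weights, well within the memory of a typical modern laptop, and quantization shrinks that further.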

IBM has released the models as open source under the Apache 2.0 license, with ISO 42001 certification and cryptographic signatures to verify authenticity. In IBM’s comparisons, Granite 4.0 Nano outperformed similarly sized competitors such as Qwen, Gemma, and LFM2 on general reasoning, math, coding, and tool-use tasks.

Nvidia Reaches $5 Trillion Market Cap

Nvidia has made history as the first company to reach a $5 trillion market capitalization, with its stock closing at about $207. That valuation exceeds the annual GDP of countries such as India, Japan, and the United Kingdom.

Nvidia first crossed $4 trillion in July, so it added another $1 trillion in roughly three months. The rise reflects intense demand for its GPUs, which are used to train and run most large AI models. Nvidia’s chips, originally designed for gaming, now power most major AI deployments worldwide.
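The jump from $4 trillion to $5 trillion is a 25% gain. Spread evenly over roughly three months (an approximation of the window from July to late October), it corresponds to compound growth of about 7.7% per month:

$$\frac{\$5\ \text{trillion} - \$4\ \text{trillion}}{\$4\ \text{trillion}} = 0.25 = 25\%, \qquad (1.25)^{1/3} - 1 \approx 0.077$$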

Nvidia’s Strategic Investments and Future Plans

CEO Jensen Huang recently disclosed about $500 billion in bookings for Nvidia’s Blackwell and Rubin chips through 2026, along with a $1 billion investment in Nokia aimed at 6G development and a partnership with Uber on autonomous taxis. Nvidia is also working with the U.S. Department of Energy to build seven AI supercomputers and has committed to invest up to $100 billion in OpenAI as it builds out data centers for future models.

Huang has also been engaging with political leaders on chip manufacturing and export restrictions. Despite warnings from some financial institutions that AI stocks may be overheating, Huang maintains that demand reflects AI’s evolution into a profitable business engine.